Archimedes

................................................................................................................

A Brief History of Computers

Where did these beasties come from?


THE FUTURE :-

Ray Kurzweil's Predictions Persist - Northrop Grumman

Kelly McSweeney

Jan 31st 2020


If Ray Kurzweil’s predictions continue to come true, machines will be smarter than humans in just a few years. Kurzweil famously predicted that the technological singularity (the crucial moment when machines become smarter than humans) will occur in our lifetime. Though this may seem incredible, he has made many outrageous predictions over the years with an astounding 86 percent success rate, according to Futurism. Regardless of how his remaining predictions turn out, his theories have shaped modern science and technology.

A Nostradamus for the Digital Age

Although Kurzweil is known as a futurist, he is also an inventor. Some of his inventions include “the first CCD flatbed scanner, the first omnifont optical character recognition, the first print-to-speech reading machine for the blind, the first text-to-speech synthesizer … and the first commercially marketed large-vocabulary speech recognition,” according to Kurzweil Accelerating Intelligence.

As Google’s director of engineering, he leads a team developing machine intelligence and natural language understanding, according to Quartz. On top of this, he has written multiple books and owns various companies. His views and research have shaped the fields of artificial intelligence, robotics, nanotechnology and biotechnology. Honors acknowledging his influence include the National Medal of Technology, induction into the National Inventors Hall of Fame, more than 18 honorary doctorates, honors from three U.S. presidents and a Technical Grammy Award. (See Kurzweil’s biography and CV on his website, kurzweilai.net.)

Ray Kurzweil’s Predictions

Given his past success, Kurzweil’s wild predictions about the future seem feasible. It is very hard to predict the future, but he points out that technology is actually quite predictable, since it has been progressing at a steady exponential rate.

In a 2005 TED Talk, he explained, “Information technologies double their capacity, price performance, bandwidth, every year. And that’s a very profound explosion of exponential growth.”

Kurzweil has made hundreds of predictions. At a time when the internet was just emerging, he was able to see the future lifestyle that we’re living today, where computers beat us at our own games, wireless communication is widespread and technology is embedded into our daily lives.

“By 2029, computers will have human-level intelligence,” Kurzweil said in an interview at SXSW 2017.

Many of his predictions are still outstanding. In a 2010 white paper posted on the Kurzweil Network, Kurzweil analyzed the accuracy of his predictions and adjusted some of the dates. We’ll have to wait to see if he’s right that software and nanobots will cure most diseases by 2030, a prediction that he reiterated in interviews with The National and Big Think.

Embracing the Technological Singularity

In physics, the singularity is a point where gravity is so intense that even space and time begin to break down (think of the Big Bang or a black hole). This has become a metaphor to describe what will happen when AI surpasses human intelligence. Kurzweil says this tipping point will happen by 2045, according to Futurism.

Once AI is smarter than humans, it will only continue to improve itself. While many people fear this future (Evil computers! Robots gone rogue! AI enslaving humans!), Kurzweil suggests that human beings will benefit by surpassing our biological limits for intelligence.

This technological singularity will be a pivotal shift that could be terrifying or thrilling, depending on your outlook. Kurzweil is an optimistic futurist, and his views have helped shift the perception of the technological singularity away from a science fiction horror scenario toward a better life where humans and machines merge.

In Kurzweil’s future, AI will be so smart that it will come up with ideas that mere humans can’t even comprehend. He suggests that this brilliant AI could solve all of our problems — including all medical problems.

Instead of being afraid that technology will turn on us, Ray Kurzweil is excited about the potential to expand our intelligence. In the future, the human mind won’t be limited to the biological brain. Someday, perhaps in 2045, our most profound thoughts could be triggered by a nanobot and uploaded into the cloud for eternity.

Ray Kurzweil - Wikipedia

Ray Kurzweil

| Ray Kurzweil | |
|---|---|
| Born | Raymond Kurzweil, February 12, 1948, New York City, New York, U.S. |
| Alma mater | Massachusetts Institute of Technology (B.S.) |
| Spouse(s) | Sonya Rosenwald (m. 1975) |
| Children | 2; Ethan and Amy |
| Website | Official website |

Raymond Kurzweil (/ˈkɜːrzwaɪl/ KURZ-wyle; born February 12, 1948) is an American inventor and futurist. He is involved in fields such as optical character recognition (OCR), text-to-speech synthesis, speech recognition technology, and electronic keyboard instruments. He has written books on health, artificial intelligence (AI), transhumanism, the technological singularity, and futurism. Kurzweil is a public advocate for the futurist and transhumanist movements and gives public talks to share his optimistic outlook on life extension technologies and the future of nanotechnology, robotics, and biotechnology.

Kurzweil received the 1999 National Medal of Technology and Innovation, the United States' highest honor in technology, from President Clinton in a White House ceremony. He was the recipient of the $500,000 Lemelson-MIT Prize for 2001. He was elected a member of the National Academy of Engineering in 2001 for the application of technology to improve human-machine communication. In 2002 he was inducted into the National Inventors Hall of Fame, established by the U.S. Patent Office. He has received 21 honorary doctorates, and honors from three U.S. presidents. The Public Broadcasting Service (PBS) included Kurzweil as one of 16 "revolutionaries who made America" along with other inventors of the past two centuries. Inc. magazine ranked him #8 among the "most fascinating" entrepreneurs in the United States and called him "Edison's rightful heir".

Life, inventions, and business career

Early life

Kurzweil grew up in the New York City borough of Queens. He attended NYC Public Education Kingsbury Elementary School PS188. He was born to secular Jewish parents who had emigrated from Austria just before the onset of World War II. He was exposed via Unitarian Universalism to a diversity of religious faiths during his upbringing.[1][2] His Unitarian church had the philosophy of many paths to the truth: his religious education consisted of studying a single religion for six months before moving on to the next.[3] His father, Fredric, was a concert pianist, a noted conductor, and a music educator. His mother, Hannah, was a visual artist. He has one sibling, his sister Enid.

Kurzweil decided he wanted to be an inventor at the age of five.[4] As a young boy, Kurzweil had an inventory of parts from various construction toys he had been given and old electronic gadgets he'd collected from neighbors. In his youth, Kurzweil was an avid reader of science fiction literature. Between the ages of eight and ten, he read the entire Tom Swift Jr. series. At the age of seven or eight, he built a robotic puppet theater and a robotic game. He was involved with computers by the age of 12 (in 1960), when only a dozen computers existed in all of New York City, and built computing devices and statistical programs for the predecessor of Head Start.[5] At the age of fourteen, Kurzweil wrote a paper detailing his theory of the neocortex.[6] His parents were involved with the arts, and he is quoted in the documentary Transcendent Man[7] as saying that the household always produced discussions about the future and technology.

Kurzweil attended Martin Van Buren High School. During class, he often held up his textbooks so that he appeared to participate while he worked on his own projects hidden behind them. His uncle, an engineer at Bell Labs, taught young Kurzweil the basics of computer science.[8] In 1963, at age 15, he wrote his first computer program.[9] He created pattern-recognition software that analyzed the works of classical composers, and then synthesized its own songs in similar styles. In 1965, he was invited to appear on the CBS television program I've Got a Secret,[10] where he performed a piano piece that was composed by a computer he had also built.[11] Later that year, he won first prize in the International Science Fair for the invention.[12] Kurzweil's submission of his first computer program, alongside several other projects, to the Westinghouse Talent Search made him one of its national winners, and he was personally congratulated by President Lyndon B. Johnson during a White House ceremony. These activities collectively impressed upon Kurzweil the belief that nearly any problem could be overcome.[13]

Mid-life

While in high school, Kurzweil had corresponded with Marvin Minsky and was invited to visit him at MIT, which he did. Kurzweil also visited Frank Rosenblatt at Cornell.[14]

He obtained a B.S. in computer science and literature in 1970 at MIT. He went to MIT to study with Marvin Minsky. He took all of the computer programming courses (eight or nine) offered at MIT in the first year and a half.

In 1968, during his sophomore year at MIT, Kurzweil started a company that used a computer program to match high school students with colleges. The program, called the Select College Consulting Program, was designed by him and compared thousands of different criteria about each college with questionnaire answers submitted by each student applicant. Around this time, he sold the company to Harcourt, Brace & World for $100,000 (roughly $748,000 in 2020 dollars) plus royalties.[15]

In 1974, Kurzweil founded Kurzweil Computer Products, Inc. and led development of the first omni-font optical character recognition system, a computer program capable of recognizing text written in any normal font. Before that time, scanners had only been able to read text written in a few fonts. He decided that the best application of this technology would be to create a reading machine, which would allow blind people to understand written text by having a computer read it to them aloud. However, this device required the invention of two enabling technologies—the CCD flatbed scanner and the text-to-speech synthesizer. Development of these technologies was completed at other institutions such as Bell Labs, and on January 13, 1976, the finished product was unveiled during a news conference headed by him and the leaders of the National Federation of the Blind. Called the Kurzweil Reading Machine, the device covered an entire tabletop.

Kurzweil's next major business venture began in 1978, when Kurzweil Computer Products began selling a commercial version of the optical character recognition computer program. LexisNexis was one of the first customers, and bought the program to upload paper legal and news documents onto its nascent online databases.

Kurzweil sold Kurzweil Computer Products to Xerox, where it was known as Xerox Imaging Systems and later as ScanSoft, and he worked as a consultant for Xerox until 1995. In 1999, Visioneer, Inc. acquired ScanSoft from Xerox to form a new public company with ScanSoft as the new company-wide name. ScanSoft merged with Nuance Communications in 2005.

Kurzweil's next business venture was in the realm of electronic music technology. After a 1982 meeting with Stevie Wonder, in which the latter lamented the divide in capabilities and qualities between electronic synthesizers and traditional musical instruments, Kurzweil was inspired to create a new generation of music synthesizers capable of accurately duplicating the sounds of real instruments. Kurzweil Music Systems was founded in the same year, and in 1984, the Kurzweil K250 was unveiled. The machine was capable of imitating a number of instruments, and in tests musicians were unable to tell the Kurzweil K250 in piano mode from a normal grand piano.[16] The recording and mixing abilities of the machine, coupled with its abilities to imitate different instruments, made it possible for a single user to compose and play an entire orchestral piece.

Kurzweil Music Systems was sold to South Korean musical instrument manufacturer Young Chang in 1990. As with Xerox, Kurzweil remained as a consultant for several years. Hyundai acquired Young Chang in 2006 and in January 2007 appointed Raymond Kurzweil as Chief Strategy Officer of Kurzweil Music Systems.[17]

Later life

Concurrent with Kurzweil Music Systems, Kurzweil created the company Kurzweil Applied Intelligence (KAI) to develop computer speech recognition systems for commercial use. The first product, which debuted in 1987, was an early speech recognition program.

Kurzweil started Kurzweil Educational Systems (KESI) in 1996 to develop new pattern-recognition-based computer technologies to help people with disabilities such as blindness, dyslexia and attention-deficit hyperactivity disorder (ADHD) in school. Products include the Kurzweil 1000 text-to-speech converter software program, which enables a computer to read electronic and scanned text aloud to blind or visually impaired users, and the Kurzweil 3000 program, which is a multifaceted electronic learning system that helps with reading, writing, and study skills.

Kurzweil sold KESI to Lernout & Hauspie. Following the legal and bankruptcy problems of the latter, he and other KESI employees purchased the company back. KESI was eventually sold to Cambium Learning Group, Inc.

During the 1990s, Kurzweil founded the Medical Learning Company.[18]

In 1997, Ray Kurzweil was the chair of the board of Anthrocon.

In 1999, Kurzweil created a hedge fund called "FatKat" (Financial Accelerating Transactions from Kurzweil Adaptive Technologies), which began trading in 2006. He has stated that the ultimate aim is to improve the performance of FatKat's A.I. investment software program, enhancing its ability to recognize patterns in "currency fluctuations and stock-ownership trends."[19] He predicted in his 1999 book, The Age of Spiritual Machines, that computers will one day prove superior to the best human financial minds at making profitable investment decisions.

In June 2005, Kurzweil introduced the "Kurzweil-National Federation of the Blind Reader" (K-NFB Reader), a pocket-sized device consisting of a digital camera and computer unit. Like the Kurzweil Reading Machine of almost 30 years before, the K-NFB Reader is designed to aid blind people by reading written text aloud. The newer machine is portable and scans text through digital camera images, while the older machine is large and scans text through flatbed scanning.

In December 2012, Kurzweil was hired by Google in a full-time position to "work on new projects involving machine learning and language processing".[20] He was personally hired by Google co-founder Larry Page.[21] Larry Page and Kurzweil agreed on a one-sentence job description: "to bring natural language understanding to Google".[22]

He received a Technical Grammy on February 8, 2015, specifically for his invention of the Kurzweil K250.[23]

Kurzweil has joined the Alcor Life Extension Foundation, a cryonics company. In the event of his declared death, Kurzweil plans to be perfused with cryoprotectants, vitrified in liquid nitrogen, and stored at an Alcor facility in the hope that future medical technology will be able to repair his tissues and revive him.[24]

Personal life

Kurzweil is agnostic about the existence of a soul.[25] On the possibility of divine intelligence, Kurzweil has said, "Does God exist? I would say, 'Not yet.'"[26]

Kurzweil married Sonya Rosenwald Kurzweil in 1975 and has two children.[27] Sonya Kurzweil is a psychologist in private practice in Newton, Massachusetts, working with women, children, parents and families. She holds faculty appointments at Harvard Medical School and William James College for Graduate Education in Psychology. Her research interests and publications are in the area of psychotherapy practice. She also serves as an active Overseer at Boston Children's Museum.[28]

He has a son, Ethan Kurzweil, who is a venture capitalist,[29] and a daughter, Amy Kurzweil, a cartoonist.[30][31]

Creative approach

Kurzweil said "I realize that most inventions fail not because the R&D department can’t get them to work, but because the timing is wrong—not all of the enabling factors are at play where they are needed. Inventing is a lot like surfing: you have to anticipate and catch the wave at just the right moment."[32][33]

For the past several decades, Kurzweil has done his most effective creative work in the lucid, dreamlike state that immediately precedes waking. He claims to have constructed inventions, solved difficult problems (algorithmic, business strategy, organizational, and interpersonal), and written speeches in this state.[14]

Books

Kurzweil's first book, The Age of Intelligent Machines, was published in 1990. The nonfiction work discusses the history of computer artificial intelligence (AI) and forecasts future developments. Other experts in the field of AI contribute heavily to the work in the form of essays. The Association of American Publishers awarded it the status of Most Outstanding Computer Science Book of 1990.[34]

In 1993, Kurzweil published a book on nutrition called The 10% Solution for a Healthy Life. The book's main idea is that high levels of fat intake are the cause of many health disorders common in the U.S., and thus that cutting fat consumption down to 10% of the total calories consumed would be optimal for most people.

In 1999, Kurzweil published The Age of Spiritual Machines, which further elucidates his theories regarding the future of technology, which themselves stem from his analysis of long-term trends in biological and technological evolution. Much emphasis is on the likely course of AI development, along with the future of computer architecture.

Kurzweil's next book, published in 2004, returned to human health and nutrition. Fantastic Voyage: Live Long Enough to Live Forever was co-authored by Terry Grossman, a medical doctor and specialist in alternative medicine.

The Singularity Is Near, published in 2005, was made into a movie starring Pauley Perrette from NCIS. In February 2007, Ptolemaic Productions acquired the rights to The Singularity Is Near, The Age of Spiritual Machines, and Fantastic Voyage, including the rights to film Kurzweil's life and ideas for the documentary film Transcendent Man,[7] which was directed by Barry Ptolemy.

Transcend: Nine Steps to Living Well Forever,[35] a follow-up to Fantastic Voyage, was released on April 28, 2009.

Kurzweil's book How to Create a Mind: The Secret of Human Thought Revealed was released on Nov. 13, 2012.[36] In it Kurzweil describes his Pattern Recognition Theory of Mind, the theory that the neocortex is a hierarchical system of pattern recognizers, and argues that emulating this architecture in machines could lead to an artificial superintelligence.[37]

Kurzweil's latest book and first fiction novel, Danielle: Chronicles of a Superheroine, follows a girl who uses her intelligence and the help of her friends to tackle real-world problems. It follows a structure akin to the scientific method. Chapters are organized as year-by-year episodes from Danielle's childhood and adolescence.[38] The book comes with companion materials, A Chronicle of Ideas, and How You Can Be a Danielle that provide real-world context. The book was released in April 2019.[39]

In an article on his website kurzweilai.net, Ray Kurzweil announced his new book The Singularity Is Nearer for release in 2022.[40]

Movies

In 2010, Kurzweil wrote and co-produced a movie directed by Anthony Waller called The Singularity Is Near: A True Story About the Future, which was based in part on his 2005 book The Singularity Is Near. Part fiction, part non-fiction, the film blends interviews with 20 big thinkers (such as Marvin Minsky) with a narrative story that illustrates some of his key ideas, including a computer avatar (Ramona) who saves the world from self-replicating microscopic robots. In addition to his movie, an independent, feature-length documentary was made about Kurzweil, his life, and his ideas, called Transcendent Man.[7]

In 2010, an independent documentary film called Plug & Pray premiered at the Seattle International Film Festival, in which Kurzweil and one of his major critics, the late Joseph Weizenbaum, argue about the benefits of eternal life.[41]

The feature-length documentary film The Singularity by independent filmmaker Doug Wolens (released at the end of 2012), showcasing Kurzweil, has been acclaimed as "a large-scale achievement in its documentation of futurist and counter-futurist ideas" and "the best documentary on the Singularity to date."[42]

Views

The Law of Accelerating Returns

In his 1999 book The Age of Spiritual Machines, Kurzweil proposed "The Law of Accelerating Returns", according to which the rate of change in a wide variety of evolutionary systems (including the growth of technologies) tends to increase exponentially.[43] He gave further focus to this issue in a 2001 essay entitled "The Law of Accelerating Returns", which proposed an extension of Moore's law to a wide variety of technologies, and used this to argue in favor of John von Neumann's concept of a technological singularity.[44]
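To make the contrast between exponential and linear change concrete, here is a minimal Python sketch. It assumes a quantity that doubles at a fixed interval, in the spirit of Moore's law; the function names, the starting value of 1 and the one-year doubling period are illustrative choices, not figures from Kurzweil's book or essay.

```python
def exponential_growth(initial, doubling_period, elapsed):
    """Value of a quantity that doubles once every `doubling_period` time units."""
    return initial * 2 ** (elapsed / doubling_period)


def linear_growth(initial, step, elapsed):
    """Value of a quantity that gains a fixed `step` per time unit."""
    return initial + step * elapsed


if __name__ == "__main__":
    # Hypothetical comparison: both quantities start at 1.0 in year 0.
    for years in (1, 10, 20, 30):
        exp_value = exponential_growth(1.0, doubling_period=1.0, elapsed=years)
        lin_value = linear_growth(1.0, step=1.0, elapsed=years)
        print(f"{years:>2} years: exponential x{exp_value:,.0f}, linear x{lin_value:,.0f}")
```

Under these assumptions, thirty doublings multiply the starting value by 2^30 (a little over one billion), while thirty linear steps only bring it to 31. That gap is the intuition behind the argument that exponential trends are easy to underestimate.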

Stance on the future of genetics, nanotechnology, and robotics

Kurzweil was working with the Army Science Board in 2006 to develop a rapid response system to deal with the possible abuse of biotechnology. He suggested that the same technologies that are empowering us to reprogram biology away from cancer and heart disease could be used by a bioterrorist to reprogram a biological virus to be more deadly, communicable, and stealthy. However, he suggests that we have the scientific tools to successfully defend against these attacks, similar to the way we defend against computer software viruses. He has testified before Congress on the subject of nanotechnology, advocating that nanotechnology has the potential to solve serious global problems such as poverty, disease, and climate change. "Nanotech Could Give Global Warming a Big Chill".[45]

In media appearances, Kurzweil has stressed the extreme potential dangers of nanotechnology[11] but argues that in practice, progress cannot be stopped because that would require a totalitarian system, and any attempt to do so would drive dangerous technologies underground and deprive responsible scientists of the tools needed for defense. He suggests that the proper place of regulation is to ensure that technological progress proceeds safely and quickly, but does not deprive the world of profound benefits. He stated, "To avoid dangers such as unrestrained nanobot replication, we need relinquishment at the right level and to place our highest priority on the continuing advance of defensive technologies, staying ahead of destructive technologies. An overall strategy should include a streamlined regulatory process, a global program of monitoring for unknown or evolving biological pathogens, temporary moratoriums, raising public awareness, international cooperation, software reconnaissance, and fostering values of liberty, tolerance, and respect for knowledge and diversity."[46]

Health and aging

Kurzweil admits that he cared little for his health until age 35, when he was found to suffer from a glucose intolerance, an early form of type II diabetes (a major risk factor for heart disease). Kurzweil then found a doctor (Terry Grossman, M.D.) who shares his somewhat unconventional beliefs to develop an extreme regimen involving hundreds of pills, chemical intravenous treatments, red wine, and various other methods to attempt to live longer. Kurzweil was ingesting "250 supplements, eight to 10 glasses of alkaline water and 10 cups of green tea" every day and drinking several glasses of red wine a week in an effort to "reprogram" his biochemistry.[47] By 2008, he had reduced the number of supplement pills to 150.[25] By 2015 Kurzweil further reduced his daily pill regimen down to 100 pills.[48]

Kurzweil asserts that in the future, everyone will live forever.[49] In a 2013 interview, he said that in 15 years, medical technology could add more than a year to one's remaining life expectancy for each year that passes, and we could then "outrun our own deaths". Among other things, he has supported the SENS Research Foundation's approach to finding a way to repair aging damage, and has encouraged the general public to hasten their research by donating.[22][50]
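The "outrun our own deaths" idea can be sketched as simple bookkeeping. The model below is a deliberately crude illustration, not anything Kurzweil published: it assumes that each calendar year uses up one year of remaining life expectancy and that medical progress adds back a constant, hypothetical `gain_per_year`.

```python
def remaining_expectancy(initial_years, gain_per_year, years_elapsed):
    """Remaining life expectancy after `years_elapsed` calendar years.

    Each calendar year consumes one year of expectancy, and medical
    progress adds back `gain_per_year` years (a hypothetical constant).
    Returns 0.0 as soon as the remaining expectancy is used up.
    """
    remaining = float(initial_years)
    for _ in range(years_elapsed):
        remaining += gain_per_year - 1.0
        if remaining <= 0:
            return 0.0
    return remaining


if __name__ == "__main__":
    # Hypothetical person with 20 years of remaining expectancy today.
    for gain in (0.5, 1.0, 1.2):
        after = remaining_expectancy(20, gain, years_elapsed=10)
        print(f"gain {gain} years/year -> {after:.1f} years remaining after 10 years")
```

In this toy model the remaining expectancy shrinks whenever the gain is below one year per year, stays flat at exactly one, and grows only once the gain exceeds one, which is the threshold the 2013 interview refers to.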

Encouraging futurism and transhumanism

Kurzweil's standing as a futurist and transhumanist has led to his involvement in several singularity-themed organizations. In December 2004, Kurzweil joined the advisory board of the Machine Intelligence Research Institute.[51] In October 2005, Kurzweil joined the scientific advisory board of the Lifeboat Foundation.[52] On May 13, 2006, Kurzweil was the first speaker at the Singularity Summit at Stanford University in Palo Alto, California.[53] In May 2013, Kurzweil was the keynote speaker at the 2013 proceeding of the Research, Innovation, Start-up and Employment (RISE) international conference in Seoul.

In February 2009, Kurzweil, in collaboration with Google and the NASA Ames Research Center, announced the creation of the Singularity University training center for corporate executives and government officials. The University's self-described mission is to "assemble, educate and inspire a cadre of leaders who strive to understand and facilitate the development of exponentially advancing technologies and apply, focus and guide these tools to address humanity's grand challenges". Using Vernor Vinge's Singularity concept as a foundation, the university offered its first nine-week graduate program to 40 students in 2009.

Predictions

Past predictions

Kurzweil's first book, The Age of Intelligent Machines, presents his ideas about the future. Written from 1986 to 1989, it was published in 1990. Building on Ithiel de Sola Pool's "Technologies of Freedom" (1983), Kurzweil claims to have forecast the dissolution of the Soviet Union due to new technologies such as cellular phones and fax machines disempowering authoritarian governments by removing state control over the flow of information.[54] In the book, Kurzweil also extrapolates trends in improving computer chess software performance, predicting that computers would beat the best human players "by the year 2000".[55] In May 1997, IBM's Deep Blue computer defeated chess World Champion Garry Kasparov in a well-publicized chess match.[56]

Perhaps most significantly, Kurzweil foresaw the explosive growth in worldwide Internet use that began in the 1990s. At the time when The Age of Intelligent Machines was published, there were only 2.6 million Internet users in the world,[57] and the medium was unreliable, difficult to use, and deficient in content. He also stated that the Internet would explode not only in the number of users but in content as well, eventually granting users access "to international networks of libraries, data bases, and information services". Additionally, Kurzweil claims to have correctly foreseen that the preferred mode of Internet access would inevitably be through wireless systems, and he was also correct to estimate that this development would become practical for widespread use in the early 21st century.

In October 2010, Kurzweil released his report, "How My Predictions Are Faring" in PDF format,[58] analyzing the predictions he made in his books The Age of Intelligent Machines (1990), The Age of Spiritual Machines (1999) and The Singularity Is Near (2005). Of the 147 predictions, Kurzweil claimed that 115 were "entirely correct", 12 were "essentially correct", 17 were "partially correct", and only 3 were "wrong". Combining the "entirely" and "essentially" correct, Kurzweil's claimed accuracy rate comes to 86%.
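The 86% figure can be checked directly from the four counts quoted above. The short Python tally below uses only those counts; the dictionary and the `share` helper are illustrative names, and the grading itself remains Kurzweil's own self-assessment.

```python
# Counts as reported in Kurzweil's 2010 self-assessment (quoted in the text).
ratings = {
    "entirely correct": 115,
    "essentially correct": 12,
    "partially correct": 17,
    "wrong": 3,
}


def share(counts, categories):
    """Fraction of all predictions that fall in the given categories."""
    total = sum(counts.values())
    return sum(counts[name] for name in categories) / total


total = sum(ratings.values())
claimed = share(ratings, ["entirely correct", "essentially correct"])
strict = share(ratings, ["entirely correct"])

print(f"total predictions: {total}")
print(f"entirely + essentially correct: {claimed:.1%}")
print(f"entirely correct only: {strict:.1%}")
```

The four categories sum to 147, and 127 of 147 is about 86.4%, which matches the claimed rate. Counting only the "entirely correct" predictions gives about 78.2%, so the headline number depends on how generously the middle categories are scored.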

Daniel Lyons, writing in Newsweek magazine, criticized Kurzweil for some of his predictions that turned out to be wrong, such as the economy continuing to boom from the 1998 dot-com through 2009, a US company having a market capitalization of more than $1 trillion by 2009, a supercomputer achieving 20 petaflops, speech recognition being in widespread use and cars that would drive themselves using sensors installed in highways; all by 2009.[59] To the charge that a 20 petaflop supercomputer was not produced in the time he predicted, Kurzweil responded that he considers Google a giant supercomputer, and that it is indeed capable of 20 petaflops.[59]

Forbes magazine claimed that Kurzweil's predictions for 2009 were mostly inaccurate. For example, Kurzweil predicted, "The majority of text is created using continuous speech recognition." This was not the case.[60]

Future predictions

In 1999, Kurzweil published a second book titled The Age of Spiritual Machines, which goes into more depth explaining his futurist ideas. In it, he states that with radical life extension will come radical life enhancement. He says he is confident that within 10 years we will have the option to spend some of our time in 3D virtual environments that appear just as real as real reality, but these will not yet be made possible via direct interaction with our nervous system. He believes that 20 to 25 years from now, we will have millions of blood-cell sized devices, known as nanobots, inside our bodies fighting diseases, and improving our memory and cognitive abilities. Kurzweil claims that a machine will pass the Turing test by 2029. Kurzweil states that humans will be a hybrid of biological and non-biological intelligence that becomes increasingly dominated by its non-biological component. In Transcendent Man, Kurzweil states, "We humans are going to start linking with each other and become a metaconnection; we will all be connected and omnipresent, plugged into a global network that is connected to billions of people and filled with data."[7]

In 2008, Kurzweil said in an expert panel in the National Academy of Engineering that solar power will scale up to produce all the energy needs of Earth's people in 20 years. According to Kurzweil, we only need to capture 1 part in 10,000 of the energy from the Sun that hits Earth's surface to meet all of humanity's energy needs.[61]

Reception

Praise

Kurzweil was called "the ultimate thinking machine" by Forbes[62] and a "restless genius"[63] by The Wall Street Journal. PBS included Kurzweil as one of 16 "revolutionaries who made America"[64] along with other inventors of the past two centuries. Inc. magazine ranked him Number 8 among the "most fascinating" entrepreneurs in the US and called him "Edison's rightful heir".[65]

Criticism

Although technological singularity is a popular concept in science fiction, some authors such as Neal Stephenson[66] and Bruce Sterling have voiced skepticism about its real-world plausibility. Sterling expressed his views on the singularity scenario in a talk at the Long Now Foundation entitled The Singularity: Your Future as a Black Hole.[67][68] Other prominent AI thinkers and computer scientists such as Daniel Dennett,[69] Rodney Brooks,[70] David Gelernter[71] and Paul Allen[72] have also criticized Kurzweil's projections.

In the cover article of the December 2010 issue of IEEE Spectrum, John Rennie criticizes Kurzweil for several predictions that failed to become manifest by the originally predicted date. "Therein lie the frustrations of Kurzweil's brand of tech punditry. On close examination, his clearest and most successful predictions often lack originality or profundity. And most of his predictions come with so many loopholes that they border on the unfalsifiable."[73]

Bill Joy, cofounder of Sun Microsystems, agrees with Kurzweil's timeline of future progress, but thinks that technologies such as AI, nanotechnology and advanced biotechnology will create a dystopian world.[74] Mitch Kapor, the founder of Lotus Development Corporation, has called the notion of a technological singularity "intelligent design for the IQ 140 people...This proposition that we're heading to this point at which everything is going to be just unimaginably different—it's fundamentally, in my view, driven by a religious impulse. And all of the frantic arm-waving can't obscure that fact for me."[19]

Some critics have argued more strongly against Kurzweil and his ideas. Cognitive scientist Douglas Hofstadter has said of Kurzweil's and Hans Moravec's books: "It's an intimate mixture of rubbish and good ideas, and it's very hard to disentangle the two, because these are smart people; they're not stupid."[75] Biologist P.Z. Myers has criticized Kurzweil's predictions as being based on "New Age spiritualism" rather than science and says that Kurzweil does not understand basic biology.[76][77] VR pioneer Jaron Lanier has even described Kurzweil's ideas as "cybernetic totalism" and has outlined his views on the culture surrounding Kurzweil's predictions in an essay for edge.org entitled One Half of a Manifesto.[42][78] Physicist and futurist Theodore Modis claims that Kurzweil's thesis of a "technological singularity" lacks scientific rigor.[79]

British philosopher John Gray argues that contemporary science is what magic was for ancient civilizations. It gives a sense of hope for those who are willing to do almost anything in order to achieve eternal life. He quotes Kurzweil's Singularity as another example of a trend which has almost always been present in the history of mankind.[80]

The Brain Makers, a history of artificial intelligence written in 1994 by HP Newquist, noted that "Born with the same gift for self-promotion that was a character trait of people like P.T. Barnum and Ed Feigenbaum, Kurzweil had no problems talking up his technical prowess...Ray Kurzweil was not noted for his understatement."[81]

In a 2015 paper, William D. Nordhaus of Yale University takes an economic look at the impacts of an impending technological singularity. He comments, "There is remarkably little writing on Singularity in the modern macroeconomic literature."[82] Nordhaus supposes that the Singularity could arise from either the demand or supply side of a market economy, but for information technology to proceed at the kind of pace Kurzweil suggests, there would have to be significant productivity trade-offs. Namely, in order to devote more resources to producing supercomputers, we must decrease our production of non-information technology goods. Using a variety of econometric methods, Nordhaus runs six supply-side tests and one demand-side test to track the macroeconomic viability of such steep rises in information technology output. Of the seven tests, only two indicated that a Singularity was economically possible, and both predicted that it would take at least 100 years to occur.

Awards and honors

- First place in the 1965 International Science Fair[12] for inventing the classical music synthesizing computer.

- The 1978 Grace Murray Hopper Award from the Association for Computing Machinery. The award is given annually to one "outstanding young computer professional" and is accompanied by a $35,000 prize.[83] Kurzweil won it for his invention of the Kurzweil Reading Machine.[84]

- In 1986, Kurzweil was named Honorary Chairman for Innovation of the White House Conference on Small Business by President Reagan.

- In 1987, Kurzweil received an Honorary Doctorate of Music from Berklee College of Music.[85]

- In 1988, Kurzweil was named Inventor of the Year by MIT and the Boston Museum of Science.[86]

- In 1990, Kurzweil was voted Engineer of the Year by the over one million readers of Design News Magazine and received their third annual Technology Achievement Award.[86][87]

- The 1995 Dickson Prize in Science

- The 1998 "Inventor of the Year" award from the Massachusetts Institute of Technology.[88]

- The 1999 National Medal of Technology.[89] This is the highest award the President of the United States can bestow upon individuals and groups for pioneering new technologies, and the President dispenses the award at his discretion.[90] Bill Clinton presented Kurzweil with the National Medal of Technology during a White House ceremony in recognition of Kurzweil's development of computer-based technologies to help the disabled.

- In 2000, Kurzweil received the Golden Plate Award of the American Academy of Achievement.[91]

- The 2000 Telluride Tech Festival Award of Technology.[92] Two other individuals also received the same honor that year. The award is presented yearly to people who "exemplify the life, times and standard of contribution of Tesla, Westinghouse and Nunn."

- The 2001 Lemelson-MIT Prize for a lifetime of developing technologies to help the disabled and to enrich the arts.[93] Only one is awarded each year – it is given to highly successful, mid-career inventors. A $500,000 award accompanies the prize.[94]

- Kurzweil was inducted into the National Inventors Hall of Fame in 2002 for inventing the Kurzweil Reading Machine.[95] The organization "honors the women and men responsible for the great technological advances that make human, social and economic progress possible."[96] Fifteen other people were inducted into the Hall of Fame the same year.[97]

- The Arthur C. Clarke Lifetime Achievement Award on April 20, 2009 for lifetime achievement as an inventor and futurist in computer-based technologies.[98]

- In 2011, Kurzweil was named a Senior Fellow of the Design Futures Council.[99]

- In 2013, Kurzweil was honored as a Silicon Valley Visionary Award winner on June 26 by SVForum.[100]

- In 2014, Kurzweil was honored with the American Visionary Art Museum's Grand Visionary Award on January 30.[101][102][103]

- In 2014, Kurzweil was inducted as an Eminent Member of IEEE-Eta Kappa Nu.

- Kurzweil has received 20 honorary doctorates in science, engineering, music and humane letters from Rensselaer Polytechnic Institute, Hofstra University and other leading colleges and universities, as well as honors from three U.S. presidents – Clinton, Reagan and Johnson.[104][105]

- Kurzweil has received seven national and international film awards including the CINE Golden Eagle Award and the Gold Medal for Science Education from the International Film and TV Festival of New York.[86]

- He gave a 2007 keynote speech to the protestant United Church of Christ in Hartford, Connecticut, alongside Barack Obama, who was then a Presidential candidate.[106][107]

Bibliography

Non-fiction

- The Age of Intelligent Machines (1990)

- The 10% Solution for a Healthy Life (1993)

- The Age of Spiritual Machines (1999)

- Fantastic Voyage: Live Long Enough to Live Forever (2004 - co-authored by Terry Grossman)

- The Singularity Is Near (2005)

- Transcend: Nine Steps to Living Well Forever (2009)

- How to Create a Mind (2012)

Fiction

- Danielle: Chronicles of a Superheroine (2019)

Early Man relied on counting on his fingers and toes (which, by the way, is the basis for our base 10 numbering system). He also used sticks and stones as markers. Later, notched sticks and knotted cords were used for counting. Finally came symbols written on hides, parchment, and later paper. Man invented the concept of number, then invented devices to help keep track of the numbers of his possessions.

Gottfried Wilhelm von Leibniz invented differential and integral calculus independently of Sir Isaac Newton, who is often given sole credit. He also invented a calculating machine known as Leibniz’s Wheel or the Step Reckoner. It could add and subtract, like Pascal’s machine, but it could also multiply and divide. It did this by repeated additions or subtractions, the way mechanical adding machines of the mid to late 20th century did. Leibniz also developed something essential to modern computers: binary arithmetic.
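
The idea of multiplying and dividing by repeated addition and subtraction can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not a model of the Step Reckoner's gearing; the function names are made up for this example, and the binary helper simply shows the notation by repeated division by two.

```python
def multiply(a: int, b: int) -> int:
    """Multiply two non-negative integers by adding a to a running total b times."""
    total = 0
    for _ in range(b):
        total += a
    return total


def divide(dividend: int, divisor: int) -> tuple[int, int]:
    """Divide by repeated subtraction; returns (quotient, remainder)."""
    if divisor <= 0:
        raise ValueError("divisor must be positive")
    quotient = 0
    while dividend >= divisor:
        dividend -= divisor
        quotient += 1
    return quotient, dividend


def to_binary(n: int) -> str:
    """Write a non-negative integer in binary by repeated division by 2."""
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next binary digit
        n //= 2
    return "".join(reversed(digits))


if __name__ == "__main__":
    print(multiply(6, 7))   # 42
    print(divide(47, 5))    # (9, 2)
    print(to_binary(42))    # 101010
```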

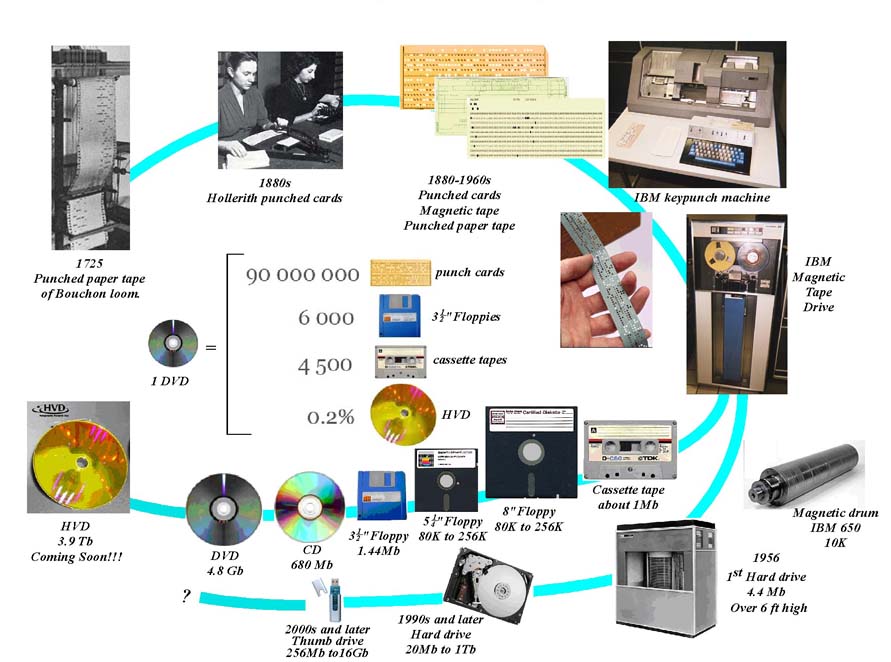

Basile Bouchon, the son of an organ maker, worked in the textile industry. At this time, fabrics with very intricate patterns woven into them were very much in vogue. Weaving a complex pattern, however, involved somewhat complicated manipulations of the threads in a loom, which frequently became tangled, broken, or out of place.

Bouchon observed the paper rolls with punched holes that his father made to program his player organs and adapted the idea as a way of "programming" a loom. The paper passed over a section of the loom, and where the holes appeared certain threads were lifted. As a result, the pattern could be woven repeatedly. This was the first stored program on punched paper. Unfortunately, the paper tore and was hard to advance, so Bouchon’s loom never really caught on and eventually ended up in the back room collecting dust.

It took inventor Joseph M. Jacquard to bring together Bouchon’s idea of a continuous punched roll and Falcon’s idea of durable punched cards to produce a really workable programmable loom. Weaving operations were controlled by punched cards tied together to form a long loop, and you could add as many cards as you wanted. Each time a thread was woven in, the loop was clicked forward by one card. The results revolutionized the weaving industry and made a lot of money for Jacquard. This idea of punched data storage was later adapted for computer data input.
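
A rough sketch of the card-loop idea, in Python: each card is a row of positions, a hole means "lift this thread", and the loop advances by one card per woven row. The card layout, the `O`/`.` notation, and the function names are all assumptions made for illustration; a real loom's mechanics were far more involved.

```python
from itertools import cycle, islice

# Hypothetical card loop: 'O' is a punched hole (thread lifted), '.' is solid card.
CARDS = [
    "O.O.O.O.",
    ".O.O.O.O",
    "OO..OO..",
]


def lifted_threads(card: str) -> list[int]:
    """Return the thread positions that are lifted for one card."""
    return [i for i, mark in enumerate(card) if mark == "O"]


def weave(cards: list[str], rows: int) -> list[list[int]]:
    """Advance the card loop one card per row, wrapping around at the end."""
    return [lifted_threads(card) for card in islice(cycle(cards), rows)]


if __name__ == "__main__":
    for row in weave(CARDS, 4):
        print(row)
```

Because the cards form a loop, asking for more rows than there are cards simply repeats the pattern, which is what let a loom weave the same design over and over.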

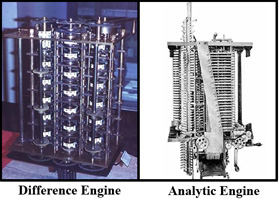

Charles Babbage is known as the father of the modern computer (even though none of his computers worked or were even constructed in their entirety). He first drew up plans for what he called the Automatic Difference Engine, which was designed to help in the construction of mathematical tables for navigation. Unfortunately, engineering limitations of his time made it impossible for the machine to be built. His next project was much more ambitious.

While a professor of mathematics at Cambridge University (where Stephen Hawking later held the same chair), a post Babbage held without ever really occupying it, he proposed the construction of a machine he called the Analytical Engine. It was to have punched card input, a memory unit (called the store), an arithmetic unit (called the mill), automatic printout, sequential program control, and 20-place decimal accuracy. He had actually worked out a plan for a computer 100 years ahead of its time. Unfortunately, it was never completed; manufacturing technology had yet to catch up to his ideas.

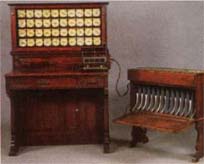

The computer trail next takes us to, of all places, the U.S. Bureau of the Census. In 1880, taking the U.S. census proved to be a monumental task. By the time it was completed, it was almost time to start over for the 1890 census. To try to overcome this problem, the Census Bureau hired Dr. Herman Hollerith. In 1887, using Jacquard’s idea of punched card data storage, Hollerith developed a punched card tabulating system. It allowed the census takers to record all the information needed on punched cards, which were then placed in a special tabulating machine with a series of counters. When a lever was pulled, a number of pins came down on the card. Where there was a hole, the pin went through the card, made contact with a tiny pool of mercury below, and advanced one of the counters by one. With Hollerith’s machine, the 1890 census tabulation was completed in one-eighth the time. And they checked the count twice.
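
The counting step can be sketched as follows, assuming each card is represented as the set of positions punched in it. The field names (`male`, `farmer`, and so on) are invented for this example and are not taken from the actual 1890 card layout.

```python
from collections import Counter


def tabulate(cards):
    """Advance one counter per punched position, once for every card fed in."""
    counters = Counter()
    for punched_positions in cards:
        for position in punched_positions:
            # A pin passing through a hole closes a circuit and trips a counter.
            counters[position] += 1
    return counters


if __name__ == "__main__":
    # Hypothetical cards: each set lists the holes punched for one person.
    cards = [
        {"male", "age_20_29", "farmer"},
        {"female", "age_30_39"},
        {"male", "age_30_39", "farmer"},
    ]
    counts = tabulate(cards)
    print(counts["male"], counts["farmer"], counts["age_30_39"])
```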

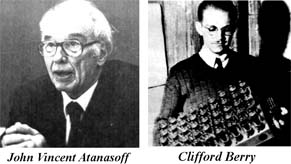

Dr. John Vincent Atanasoff and his graduate assistant, Clifford Berry, built the first truly electronic computer, called the Atanasoff-Berry Computer or ABC. Atanasoff said the idea came to him as he was sitting in a small roadside tavern in Illinois. This computer used a circuit with 45 vacuum tubes to perform the calculations, and capacitors for storage. This was also the first computer to use binary math.

In 1944, Dr. Howard Aiken of Harvard finished the construction of the Automatic Sequence Controlled Calculator, popularly known as the Mark I. It contained over 3000 mechanical relays and was the first electro-mechanical computer capable of making logical decisions, like "if x == 3 then do this", not like "If it's raining outside, I need to carry an umbrella." It could perform an addition in 3/10 of a second. Compare that with something on the order of a couple of nanoseconds (billionths of a second) today.
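
To put the speed comparison in numbers, here is a small sketch. It takes "a couple of nanoseconds" to mean 2 ns, which is an assumption for the sake of arithmetic, and it also shows the kind of simple logical decision described above. The function names are illustrative only.

```python
MARK_I_ADD_NS = 300_000_000  # 3/10 of a second, expressed in nanoseconds
MODERN_ADD_NS = 2            # assumed value for "a couple of nanoseconds"


def speedup(old_ns: int, new_ns: int) -> int:
    """How many times faster the newer operation is (whole-number ratio)."""
    return old_ns // new_ns


def decide(x: int) -> str:
    """A logical decision of the 'if x == 3 then do this' kind."""
    if x == 3:
        return "do this"
    return "do something else"


if __name__ == "__main__":
    print(speedup(MARK_I_ADD_NS, MODERN_ADD_NS))  # 150000000
    print(decide(3))                              # do this
```

Under that assumption, a modern addition is roughly 150 million times faster than one on the Mark I.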

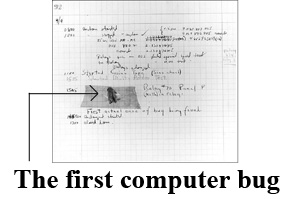

One of the primary programmers for the Mark I was Grace Hopper. One day the Mark I was malfunctioning and not reading its paper tape input correctly. Ms. Hopper checked out the reader and found a dead moth in the mechanism, with its wings blocking the reading of the holes in the paper tape. She removed the moth, taped it into her log book, and recorded: "Relay #70 Panel F (moth) in relay. First actual case of bug being found."

In 1948, an event occurred that was to forever change the course of computers and electronics. Working at Bell Labs, three scientists, John Bardeen (1908-1991) (left), Walter Brattain (1902-1987) (right), and William Shockley (1910-1989) (seated), invented the transistor.

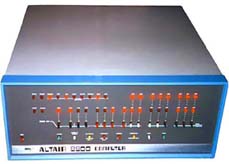

In 1981, IBM produced its first microcomputer. Then the clones started to appear. This microcomputer explosion fulfilled its slogan: "computers by the millions for the millions."

Compared to ENIAC, microcomputers of the early 80s:
